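Computer vision is a technology that uses machine learning and deep learning to analyze images and video. This post covers the basic concepts of image processing and how convolutional neural networks (CNNs) work, then walks through a CNN-based image classification exercise in Python. It also introduces project ideas for object detection and image segmentation.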

[ 엔카코 ]

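1. Computer Vision Theory

1.1 Basic Image Processing Concepts

In computer vision, image processing is the process of extracting meaningful information from an image using a variety of techniques. The key concepts are:

- Pixel: The smallest unit of an image, represented by RGB values (color images) or a single grayscale value (black-and-white images).
- Filter: A small matrix of weights (a kernel) used to emphasize particular patterns or remove noise.
- Convolution: The operation that slides a filter across the image and computes a weighted sum at each position to extract features.
- Edge detection: A technique for finding boundaries in an image, where pixel intensity changes sharply.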
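To make the filter and convolution ideas concrete, here is a minimal sketch in plain Python. The 5x5 image and the `convolve2d` helper are hypothetical, made up for illustration; the kernel is the standard Sobel kernel for horizontal intensity changes. As in CNN layers, the kernel is applied without flipping, which is strictly speaking cross-correlation.

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the response map."""
    k_h, k_w = len(kernel), len(kernel[0])
    out_h = len(image) - k_h + 1
    out_w = len(image[0]) - k_w + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the image patch currently under the kernel
            total = sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(k_h)
                for dj in range(k_w)
            )
            row.append(total)
        output.append(row)
    return output


# Hypothetical 5x5 grayscale image: dark on the left (0), bright on the right (255)
image = [[0, 0, 0, 255, 255] for _ in range(5)]

# Sobel kernel that responds to horizontal intensity changes (vertical edges)
sobel_x = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]

edges = convolve2d(image, sobel_x)
for row in edges:
    print(row)  # each row prints [0, 1020, 1020]
```

The response is zero over the flat dark region and large where the dark and bright areas meet, which is the behavior an edge detector is meant to have.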

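1.2 Understanding Convolutional Neural Networks (CNNs)

A CNN (convolutional neural network) is one of the most widely used deep learning models for image recognition. Its main components are:

- Convolutional layer: Extracts features from the image.
- Pooling layer: Shrinks the feature map to reduce computation while retaining the most important information.
- Fully connected layer: Performs the final classification based on the extracted features.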
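As a rough illustration of what a pooling layer does, the sketch below applies 2x2 max pooling to a small feature map. The `max_pool2d` helper and the values are hypothetical; deep learning frameworks provide this operation as a built-in layer.

```python
def max_pool2d(feature_map, size=2):
    """Apply non-overlapping size x size max pooling to a 2D feature map."""
    out_h = len(feature_map) // size
    out_w = len(feature_map[0]) // size
    return [
        [
            # Keep only the strongest response inside each window
            max(
                feature_map[i * size + di][j * size + dj]
                for di in range(size)
                for dj in range(size)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# Made-up 4x4 feature map
feature_map = [
    [1, 3, 2, 0],
    [4, 6, 1, 1],
    [0, 2, 9, 5],
    [1, 1, 3, 7],
]

pooled = max_pool2d(feature_map)
print(pooled)  # [[6, 2], [2, 9]]
```

The 4x4 map shrinks to 2x2, so later layers process a quarter of the values while the largest activation in each window survives.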

A CNN stacks these layers in sequence so that it can learn complex patterns in images effectively.
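One way to see how stacked layers transform an image is to track the feature-map size layer by layer. The sketch below assumes a 28x28 grayscale input, 3x3 convolutions without padding, and 2x2 pooling. The layer list is an illustrative assumption and the helper names are hypothetical.

```python
def conv_output_size(size, kernel, stride=1, padding=0):
    """Spatial output size of a convolution along one dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_output_size(size, pool):
    """Spatial output size of non-overlapping pooling along one dimension."""
    return size // pool


# Assumed stack: (layer type, kernel or pool size, output channels)
stack = [
    ("conv", 3, 32),
    ("pool", 2, None),
    ("conv", 3, 64),
    ("pool", 2, None),
    ("conv", 3, 64),
]

size, channels = 28, 1  # 28x28 grayscale input
shapes = [(size, size, channels)]
for kind, k, out_channels in stack:
    if kind == "conv":
        size = conv_output_size(size, k)
        channels = out_channels  # a conv layer sets the channel count
    else:
        size = pool_output_size(size, k)  # pooling keeps the channel count
    shapes.append((size, size, channels))
    print(f"{kind:>4}: {size}x{size}x{channels}")

# Number of values handed to the fully connected layers after flattening
flattened = size * size * channels
print("flattened features:", flattened)  # 576
```

Under these assumptions the spatial size goes 28, 26, 13, 11, 5, 3 while the channel count grows, which is the usual trade of resolution for richer features.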

2. Exercise: Image Classification with a CNN

The example below uses Python with the TensorFlow and Keras libraries to build a CNN model that classifies the MNIST dataset.

2.1 Example Code

# winget으로 설치 (Windows 11/10)

winget install Python.Python.3.10

# py launcher 설치

pip install py

# 설치된 모든 Python 버전 확인

py --list

# Python 3.10으로 실행

py -3.10

# pip 사용할 때

py -3.10 -m pip install tensorflowimport tensorflow as tf

from tensorflow.keras import datasets, layers, models

import matplotlib.pyplot as plt

import matplotlib.font_manager as fm

# 한글 폰트 설정

plt.rcParams['font.family'] = 'Malgun Gothic' # Windows

# plt.rcParams['font.family'] = 'AppleGothic' # Mac

plt.rcParams['axes.unicode_minus'] = False # 마이너스 기호 깨짐 방지

# MNIST 데이터셋 로드 및 전처리

(train_images, train_labels), (test_images, test_labels) = datasets.mnist.load_data()

# 데이터 차원 확장 및 정규화 (CNN은 4D 텐서를 입력으로 받음: (샘플 수, 높이, 너비, 채널))

train_images = train_images.reshape((60000, 28, 28, 1)).astype('float32') / 255.0

test_images = test_images.reshape((10000, 28, 28, 1)).astype('float32') / 255.0

# 모델 구성

model = models.Sequential([

layers.Conv2D(32, (3, 3), activation='relu', input_shape=(28, 28, 1)),

layers.MaxPooling2D((2, 2)),

layers.Conv2D(64, (3, 3), activation='relu'),

layers.MaxPooling2D((2, 2)),

layers.Conv2D(64, (3, 3), activation='relu'),

layers.Flatten(),

layers.Dense(64, activation='relu'),

layers.Dense(10, activation='softmax') # 10개의 클래스 (숫자 0-9)

])

# 모델 컴파일

model.compile(optimizer='adam',

loss='sparse_categorical_crossentropy',

metrics=['accuracy'])

# 모델 요약 출력

model.summary()

# 모델 학습

history = model.fit(train_images, train_labels, epochs=5,

validation_data=(test_images, test_labels))

# 모델 평가

test_loss, test_acc = model.evaluate(test_images, test_labels)

print(f"테스트 정확도: {test_acc:.4f}")

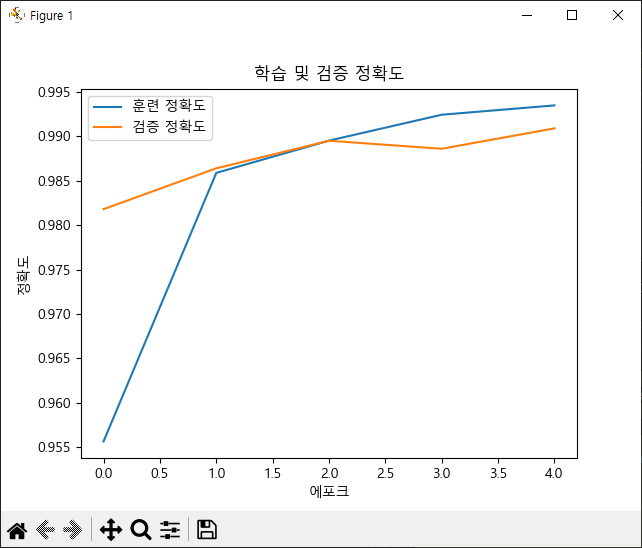

# 학습 과정 시각화

plt.plot(history.history['accuracy'], label='훈련 정확도')

plt.plot(history.history['val_accuracy'], label='검증 정확도')

plt.xlabel('에포크')

plt.ylabel('정확도')

plt.legend()

plt.title('학습 및 검증 정확도')

plt.show()2.2 코드 설명

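- Data preprocessing: Loads the MNIST dataset, scales pixel values to the range 0 to 1, and reshapes the images into the 4D input format the CNN expects.
- CNN model: Builds a simple CNN with three convolutional layers and two max-pooling layers, followed by two fully connected layers.
- Training and evaluation: Trains for 5 epochs and measures accuracy on the test data.
- Visualization: Plots training and validation accuracy to analyze how training progressed.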
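The `softmax` output layer, the `sparse_categorical_crossentropy` loss, and the `accuracy` metric used in the model can be illustrated with a few lines of plain Python. This is a simplified sketch, not Keras's implementation: the logits are made-up values for three classes instead of ten, and the helper names are hypothetical.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sparse_cross_entropy(probs, label):
    """Loss for one sample whose label is an integer class index."""
    return -math.log(probs[label])


# Made-up logits for 3 samples and 3 classes, with integer labels
batch_logits = [
    [2.0, 1.0, 0.1],
    [0.5, 2.5, 0.3],
    [1.0, 1.2, 0.9],
]
labels = [0, 1, 2]

batch_probs = [softmax(logits) for logits in batch_logits]

# The predicted class is the index with the highest probability
predictions = [probs.index(max(probs)) for probs in batch_probs]

loss = sum(sparse_cross_entropy(p, y) for p, y in zip(batch_probs, labels)) / len(labels)
accuracy = sum(pred == y for pred, y in zip(predictions, labels)) / len(labels)

print("predictions:", predictions)  # [0, 1, 1]
print(f"mean loss: {loss:.4f}, accuracy: {accuracy:.2f}")
```

The "sparse" variant of the loss takes labels as integer class indices, which is why the MNIST labels can be used directly without one-hot encoding.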

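3. Project: Building an Object Detection or Image Segmentation Application

CNNs can serve as the foundation for more advanced computer vision projects.

3.1 Object Detection

Object detection locates multiple objects in an image and predicts the position of each one. Representative models include:

- YOLO (You Only Look Once)
- SSD (Single Shot MultiBox Detector)
- Faster R-CNN

Project ideas

- Detecting people or vehicles in real-time CCTV footage
- Building an automatic defect detection system for a smart factory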

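3.2 Image Segmentation

Image segmentation separates objects at the pixel level. The main models are:

- U-Net: Widely used in medical image analysis
- Mask R-CNN: Performs object detection and pixel-level segmentation at the same time

Project ideas

- Automatically detecting cancer cells in medical images
- Recognizing roads and lanes for autonomous vehicles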
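Pixel-level segmentation can be pictured as a mask with one label per pixel. The sketch below thresholds a made-up 4x4 probability map into a binary mask and compares it with a made-up ground-truth mask using intersection over union (IoU). The 0.5 threshold, the data, and the helper names are assumptions for illustration and do not come from any specific model.

```python
def to_mask(prob_map, threshold=0.5):
    """Turn per-pixel probabilities into a binary mask (1 = object, 0 = background)."""
    return [[1 if p >= threshold else 0 for p in row] for row in prob_map]


def mask_iou(mask_a, mask_b):
    """Intersection over union of two binary masks of the same size."""
    intersection = 0
    union = 0
    for row_a, row_b in zip(mask_a, mask_b):
        for a, b in zip(row_a, row_b):
            intersection += a & b  # pixel is object in both masks
            union += a | b         # pixel is object in at least one mask
    return intersection / union if union > 0 else 0.0


# Made-up model output: probability that each pixel belongs to the object
prob_map = [
    [0.1, 0.2, 0.9, 0.8],
    [0.1, 0.3, 0.7, 0.6],
    [0.0, 0.1, 0.2, 0.1],
    [0.0, 0.0, 0.1, 0.0],
]

# Made-up ground-truth mask
true_mask = [
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
]

pred_mask = to_mask(prob_map)
score = mask_iou(pred_mask, true_mask)
print(f"mask IoU: {score:.3f}")  # mask IoU: 0.333
```

Here the predicted mask is shifted one pixel to the right of the ground truth, so only 2 of the 6 pixels covered by either mask overlap.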

The script below sketches the first object detection idea from section 3.1, applied to a single image rather than a video stream: it uses a pretrained YOLOv8 model (yolov8n.pt) to detect people and vehicles, draws the bounding boxes, and saves the result.

```python
import cv2
import torch
from ultralytics import YOLO

# Workaround: PyTorch 2.6+ defaults torch.load to weights_only=True, which can
# fail on YOLO checkpoints with some ultralytics versions. Forcing
# weights_only=False unpickles arbitrary objects, so only load trusted weights.
_original_load = torch.load


def custom_load(f, *args, **kwargs):
    kwargs['weights_only'] = False
    return _original_load(f, *args, **kwargs)


torch.load = custom_load


def detect_vehicles(image_path):
    # Load the YOLO model and move it to the GPU when one is available
    model = YOLO('yolov8n.pt')
    device = 'cuda' if torch.cuda.is_available() else 'cpu'
    model.to(device)

    # Load the image
    img = cv2.imread(image_path)
    if img is None:
        raise ValueError("Could not load the image.")

    # Keep a copy at the original size for drawing
    original_img = img.copy()

    # Run detection; FP16 inference is requested only on a GPU
    results = model(img, conf=0.3, iou=0.45, half=(device == 'cuda'))

    # Target class IDs (COCO dataset)
    target_classes = {
        0: 'person',
        2: 'car',
        3: 'motorcycle',
        5: 'bus',
        7: 'truck',
    }

    detection_count = {'person': 0, 'vehicle': 0}
    detected_boxes = []

    # Process each detected object
    for result in results:
        for box in result.boxes:
            cls = int(box.cls[0])
            conf = float(box.conf[0])

            # Only handle the target classes
            if cls not in target_classes:
                continue

            # IoU check: skip boxes that overlap an already accepted box
            current_box = box.xyxy[0].cpu().numpy()
            if any(calculate_iou(current_box, kept) > 0.45 for kept in detected_boxes):
                continue

            # Count people and vehicles separately
            if cls == 0:
                detection_count['person'] += 1
                color = (0, 255, 0)  # green (person), BGR order
            else:
                detection_count['vehicle'] += 1
                color = (0, 0, 255)  # red (vehicle), BGR order

            detected_boxes.append(current_box)

            # Draw the bounding box
            x1, y1, x2, y2 = map(int, current_box)
            cv2.rectangle(original_img, (x1, y1), (x2, y2), color, 2)

            # Show the class and confidence, keeping the label inside the image
            label = f"{target_classes[cls]}: {conf:.2f}"
            cv2.putText(original_img, label, (x1, max(y1 - 10, 10)),
                        cv2.FONT_HERSHEY_SIMPLEX, 0.5, color, 2)

    print(f"People detected: {detection_count['person']}")
    print(f"Vehicles detected: {detection_count['vehicle']}")

    return original_img, detection_count


def calculate_iou(box1, box2):
    # Intersection over union of two (x1, y1, x2, y2) boxes
    x1 = max(box1[0], box2[0])
    y1 = max(box1[1], box2[1])
    x2 = min(box1[2], box2[2])
    y2 = min(box1[3], box2[3])

    intersection = max(0, x2 - x1) * max(0, y2 - y1)
    box1_area = (box1[2] - box1[0]) * (box1[3] - box1[1])
    box2_area = (box2[2] - box2[0]) * (box2[3] - box2[1])
    union = box1_area + box2_area - intersection

    return intersection / union if union > 0 else 0


def save_result(img, output_path):
    cv2.imwrite(output_path, img)
    print(f"Result saved to {output_path}.")


if __name__ == "__main__":
    # Image paths
    input_image = "car.jpg"       # image to analyze
    output_image = "result2.jpg"  # where to save the annotated image

    # Run object detection
    result_img, counts = detect_vehicles(input_image)

    # Save the result
    save_result(result_img, output_image)
```
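The duplicate check in `detect_vehicles` follows the same idea as greedy non-maximum suppression (NMS): keep a box only if it does not overlap too much with a box that was already kept. The standalone sketch below spells that out in plain Python, visiting boxes in order of decreasing confidence. The boxes, scores, and the `nms` helper are hypothetical; it is a simplified illustration, not the implementation used inside YOLO.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    x1 = max(box_a[0], box_b[0])
    y1 = max(box_a[1], box_b[1])
    x2 = min(box_a[2], box_b[2])
    y2 = min(box_a[3], box_b[3])
    intersection = max(0, x2 - x1) * max(0, y2 - y1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.45):
    """Return the indices of the boxes to keep, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep the box only if it does not overlap any higher-scoring kept box
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


# Made-up detections: the first two boxes cover nearly the same object
boxes = [(10, 10, 110, 110), (20, 20, 120, 120), (200, 200, 300, 300)]
scores = [0.8, 0.9, 0.7]

kept = nms(boxes, scores)
print(kept)  # [1, 2]
```

The first two boxes overlap with an IoU of about 0.68, so only the higher-scoring one survives, while the distant third box is kept.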

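In this post, we covered the core concepts of computer vision and how CNNs work, and walked through a CNN-based image classification exercise in Python. From here, you can build more advanced applications such as object detection and image segmentation.

Computer vision is a powerful technology with applications across many industries. Try building your own CNN project to gain deeper hands-on experience.

If you have any questions or comments, please leave them in the comments! 😊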
